2021 FDA Science Forum

High Performance Computing Techniques for Big Data Processing

- Authors:

- Center:

-

Contributing OfficeCenter for Devices and Radiological Health

Abstract

Background

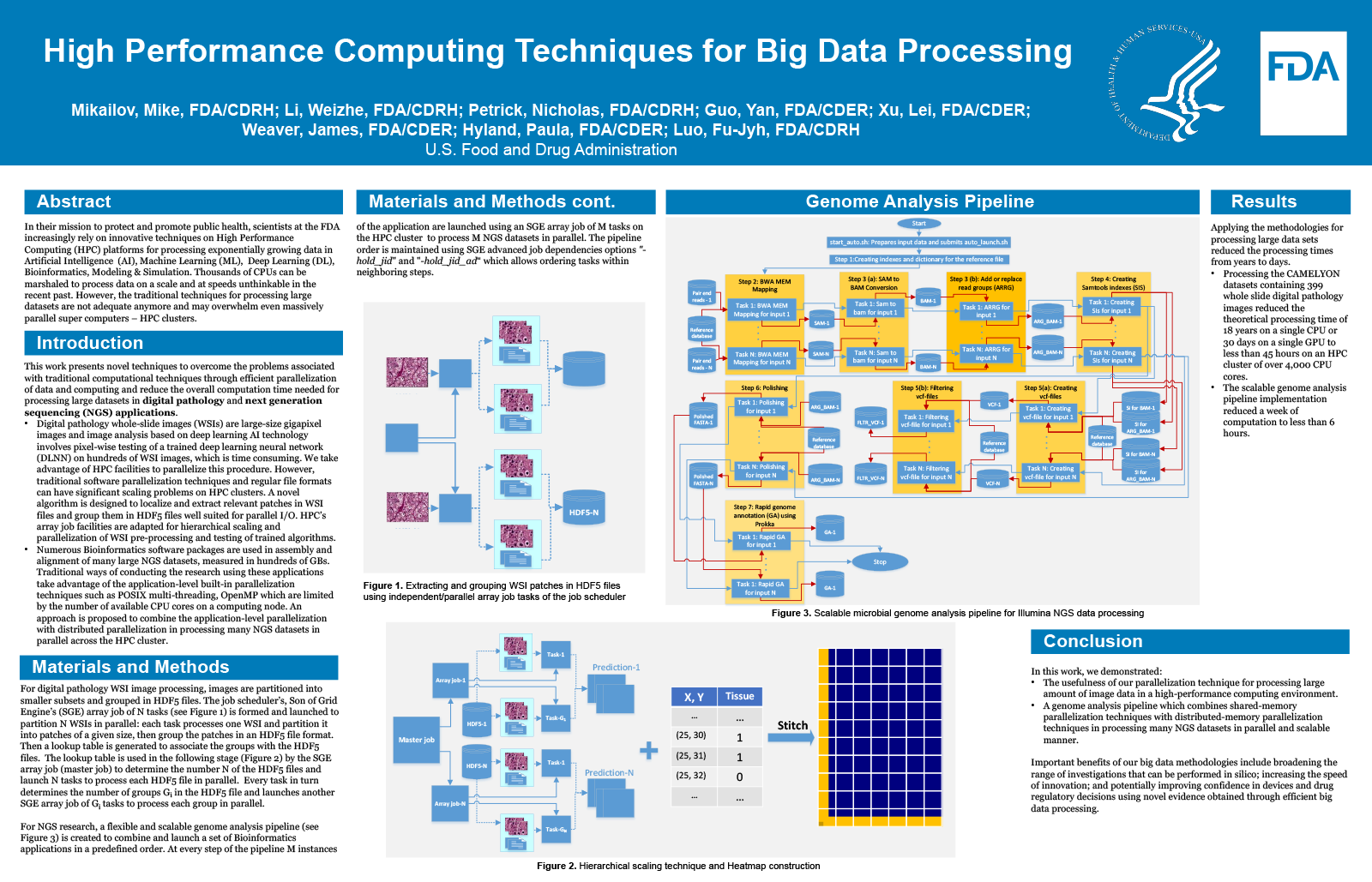

In their mission to protect and promote public health, scientists at the FDA increasingly rely on innovative techniques on High Performance Computing (HPC) platforms for processing exponentially growing data in AI/ML/DL, Bioinformatics, Modeling & Simulation. Thousands of CPUs can be marshaled to process data on a scale and at speeds unthinkable in the recent past. However, the traditional techniques for processing large data sets are not adequate anymore and may overwhelm even massively parallel super computers – HPC clusters.

Purpose

To overcome the problems associated with traditional computational techniques through efficient parallelization of data and computing and reduce the overall computation time needed for processing large data sets.

Methodology

Data is partitioned into smaller subsets and grouped using a Hierarchical Data Format 5 files and massively parallel supercomputing techniques are applied to large scale computations such as a scalable genome analysis pipeline to fully automate Next Generation Sequencing data processing.

Results

Applying the methodologies for processing large data sets reduced the processing times from years to days. For example, the scalable genome analysis pipeline implementation reduced a week of computation to less than 6 hours. Likewise, applying these techniques to a deep learning neural network processing the CAMELYON datasets containing 399 whole slide digital pathology images reduced the theoretical processing time of 18 years on a single CPU or 30 days on a single GPU to less than 45 hours on an HPC cluster of over 4,000 CPU cores.

Conclusion

Important benefits of our big data methodologies include: broadening the range of investigations that can be performed in silico; increasing the speed of innovation; and potentially improving confidence in devices and drug regulatory decisions using novel evidence obtained through efficient big data processing.